Microsoft Copilot: What CISOs Need to Know

Enterprise companies are rapidly testing and adopting AI assistants like Copilot for Microsoft 365 and ChatGPT. In many cases, the choice is simple: use AI assistants to unlock growth and efficiency, now and in the future, or risk losing ground to competitors.

However, with their benefits come risks. CISOs and security teams are tasked with ensuring AI assistants are rolled out and leveraged securely, weighing the risks and rewards. Privacy issues, sensitive data exposure, and compliance challenges are major concerns teams must consider.

Before a company deploys Copilot for Microsoft 365 or other Copilot tools from Microsoft, robust AI governance must be in place.

CISOs and security teams need to consider:

- The security, compliance, and legal risks of Microsoft Copilot.

- Prioritizing Data Access Governance (DAG) as part of their Enterprise AI program.

- Taking concrete steps to ensure a secure Copilot rollout.

What is Copilot for Microsoft 365?

Microsoft Copilot is a Generative AI chatbot developed in collaboration with OpenAI that launched in February 2023.

Copilot for Microsoft 365 is an enterprise-grade version of Microsoft’s chatbot, offered to companies that already utilize Microsoft 365 and subscribe to the add-on.

The AI work assistant seamlessly integrates with Microsoft 365 apps including Teams, Word, Outlook, PowerPoint, Excel, and more. It helps employees streamline tasks, from staying engaged in meetings to summarizing research.

Copilot can search, gather, and surface data from all Microsoft 365 documents, emails, calendars, presentations, contacts, and meetings. It also analyzes employee behavior when using Microsoft apps and offers tips and shortcuts for greater productivity.

What are Copilot’s information security risks?

Copilot’s four major security risks are oversharing and bad permissions, privacy concerns, insider risks, and manipulation by threat actors.

“If you provide data to a public LLM or Copilot through Microsoft, you are taking a big risk with your data. There are a lot of privacy and ‘grandma’s attic’ issues with these models. You may discover information and data that was secured by obscurity.”

—IT/ Security leader via Gartner Peer Learning

Here are the four main security risks to consider:

1. Oversharing and bad permissions

- Oversharing puts sensitive data at risk: A 2023 study found companies have an average of 802,000 files with “business-critical data” exposed due to oversharing.

- Unauthorized users accessing sensitive data: Oversharing grants internal and external users access to confidential information, leading to the risk of data leakage.

- Microsoft Copilot amplifies data exposure risk: The AI work assistant speeds up and simplifies information access for employees, increasing the potential for exposure. It seamlessly surfaces documents and information to users, exposing sharing misconfigurations that were previously hidden.

- Overly broad access permissions for employees: Employees often have more access to company data than they need or are aware of. Sharing Microsoft documents and SharePoint sites is easy: access can be granted to anyone with a link, or by adding a group or collaborator.

- Lingering access permissions: Permissions are rarely revoked when access is no longer required, leaving data vulnerable for months or even years.
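
The oversharing patterns above (public links, external access, and lingering permissions) can be sketched as a simple audit over sharing records. This is a minimal illustration, not a Microsoft API: the `SharingGrant` record, its field names, the `"anyone-with-link"` marker, and the 180-day staleness threshold are all assumptions made for the example.

```python
from dataclasses import dataclass
from datetime import date, timedelta


# Hypothetical record of one sharing grant on a file. The field names are
# illustrative and are not taken from any Microsoft API.
@dataclass(frozen=True)
class SharingGrant:
    file_path: str
    grantee: str        # a user, a group, or the marker "anyone-with-link"
    is_external: bool   # True when the grantee is outside the company
    last_used: date     # last time this grant was actually exercised


def find_risky_grants(grants, today, stale_after_days=180):
    """Return (grant, reasons) pairs for grants that look overshared or stale."""
    cutoff = today - timedelta(days=stale_after_days)
    flagged = []
    for grant in grants:
        reasons = []
        if grant.grantee == "anyone-with-link":
            reasons.append("public link")
        if grant.is_external:
            reasons.append("external user")
        if grant.last_used < cutoff:
            reasons.append("lingering access")
        if reasons:
            flagged.append((grant, reasons))
    return flagged


# Made-up sample data for illustration only.
grants = [
    SharingGrant("/finance/forecast.xlsx", "anyone-with-link", False, date(2024, 5, 1)),
    SharingGrant("/legal/contract.docx", "vendor@example.com", True, date(2023, 1, 15)),
    SharingGrant("/hr/handbook.docx", "all-staff", False, date(2024, 5, 20)),
]

for grant, reasons in find_risky_grants(grants, today=date(2024, 6, 1)):
    print(f"{grant.file_path}: {', '.join(reasons)}")
```

In practice, the input records would come from whatever permissions report or export a team already has; the point of the sketch is only that each risk in the list maps to a checkable condition.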

2. Privacy concerns

- Copilot accesses your organization’s data: This includes emails, chats, and files employees have permission to access through Microsoft Graph.

- Relevant suggestions, privacy concerns: Copilot’s suggestions are relevant and based on an employee’s data, but Copilot’s responses could expose sensitive information due to oversharing.

- Problems with accuracy: Microsoft acknowledges that Copilot’s responses are not 100% factually accurate. The company recommends carefully reviewing all Copilot suggestions before using or sharing them.

- Data collection for improvement: Microsoft collects some anonymized and encrypted data on the interactions employees have with Copilot for diagnostics and improvements. This data might contain fragments of employee personal or organizational information.

- Data sharing by default: Diagnostic data is shared with Microsoft unless employees opt out by adjusting privacy settings in Microsoft 365 apps.

3. Insider risks

- Accidental data leaks through Copilot misuse: Employees might be unaware of the full scope of data they can access, leading Copilot to reveal confidential information unintentionally.

- Malicious insider threats:

  - Prompt engineering: Insiders could use specific prompts to trick Copilot into revealing unauthorized information.

  - Software/Model compromise: In rare cases, insiders might exploit vulnerabilities or introduce malicious code to compromise Copilot’s security.

4. External threats

- Compromised Copilot accounts expose company data: Attackers who gain access to a Copilot-enabled account could access sensitive data when querying the tool. They could leak responses that contain confidential information; for example, trade secrets or financial data.

- Model inversion attacks: AI-powered systems can be targeted by attacks on the model itself. In a model inversion attack, threat actors analyze a model’s outputs to infer or reconstruct sensitive information it has learned. Related techniques aim to manipulate the model’s behavior rather than steal information from it.

What are Copilot’s compliance and legal risks?

Implementing Copilot for Microsoft 365 across an organization creates several legal and compliance risks that must be managed. The risks include confidentiality, copyright infringement, and discrimination claims.

1. Confidentiality

- Confidentiality concerns: The biggest legal concern when using Copilot is confidentiality. While Microsoft promises to keep code inputs and outputs confidential, there’s a catch that companies who handle regulated data must be aware of. Microsoft reserves the right to access Copilot activity (prompts and responses) for 30 days. Although this access is for abuse monitoring purposes only, it creates the risk of your company’s confidential data being viewed.

- Option to opt out: For users handling sensitive or legally regulated data, Microsoft offers the option to apply for an exemption from abuse monitoring access. However, this adds an extra step that requires approval from Microsoft.

- Two important caveats: There are two other situations where an employee’s Copilot data might venture outside Microsoft’s secure environment:

  - Referencing public web content: If a user enables Copilot to use Bing for information retrieval, company data might be transmitted outside the Microsoft 365 service boundary.

  - Using plugins: Plugins can enhance Copilot’s functionality but could also require sharing data with third parties beyond Microsoft’s control.

2. Copyright infringement

- Copyright concerns: One major concern security leaders mentioned was copyright infringement. There’s a risk that Copilot might unintentionally reproduce copyrighted material it encountered during training, including content scraped from the internet without permission. Output that contains such material could infringe copyright.

- Microsoft coverage has limits: While Microsoft offers some copyright infringement coverage for Copilot, it has limitations.

- Talk to Legal and Compliance: Consulting with company legal counsel or the compliance team is recommended to understand the scope of Microsoft’s coverage and potential legal risks related to copyright issues.

3. Bias and discrimination claims

- Be aware of bias: It’s important to consider bias and discrimination when using Copilot, especially in sensitive areas like employment and public accommodation settings.

- Non-discrimination laws still apply: Laws, regulations, and court rulings have established protections against bias in various areas, including situations like job candidate screening. If your company uses Copilot to perform these types of tasks, that use must comply with those laws.

Why companies must prioritize Data Access Governance when using AI chatbots

Prioritizing Data Access Governance (DAG) has become imperative for organizations deploying AI chatbots like Copilot for Microsoft 365. DAG focuses on managing and monitoring who has access to specific data within an organization and establishing clear protocols for how this data is classified and handled.

Within platforms like SharePoint, OneDrive, and Teams, it’s not uncommon for hundreds of thousands—or even tens of millions—of company files to be shared and accessed by employees. Employees typically have access to sensitive data without a clear understanding of its confidentiality level, and often without even realizing they have access to the data in the first place. This oversight can pose significant security risks, particularly as AI chatbots require extensive access to organizational data to be effective.

Before giving Copilot the keys to the kingdom – all Microsoft documents, emails, calendars, presentations, contacts, meetings, and more – implementing a robust Data Access Governance program is paramount.

This approach minimizes potential security breaches and ensures that your organization’s data handling practices comply with relevant regulations and standards, safeguarding valuable company information.
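
One way to picture the "who has access to what" question at the heart of DAG is to expand group-based grants into individual users and compare the result against the readers a data owner actually approved. The sketch below does that under stated assumptions: the group names, file paths, the `"confidential"` label, and the `approved_readers` mapping are hypothetical sample data, not output from any Microsoft tool.

```python
# Hypothetical group membership: group name -> set of user names.
group_members = {
    "finance-team": {"ana", "bo"},
    "all-staff": {"ana", "bo", "cy", "dee"},
}

# Hypothetical access lists: file -> sensitivity label and granted principals.
file_access = {
    "/finance/payroll.xlsx": {
        "label": "confidential",
        "granted_to": {"finance-team", "all-staff"},
    },
    "/marketing/brochure.pptx": {
        "label": "public",
        "granted_to": {"all-staff"},
    },
}

# Hypothetical owner-approved readers for each confidential file.
approved_readers = {"/finance/payroll.xlsx": {"ana", "bo"}}


def effective_users(granted_to, members):
    """Expand groups into individual users; unknown names are treated as users."""
    users = set()
    for principal in granted_to:
        users |= members.get(principal, {principal})
    return users


def excess_access(access, approved, members):
    """Map each confidential file to users who can read it but are not approved."""
    findings = {}
    for path, info in access.items():
        if info["label"] != "confidential":
            continue
        extra = effective_users(info["granted_to"], members) - approved.get(path, set())
        if extra:
            findings[path] = sorted(extra)
    return findings


print(excess_access(file_access, approved_readers, group_members))
```

Here a single broad group grant gives two unapproved users access to a confidential file, which is the kind of gap an AI assistant working within a user's existing permissions could surface in a response.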

Deploying Microsoft Copilot: What CISOs need to know

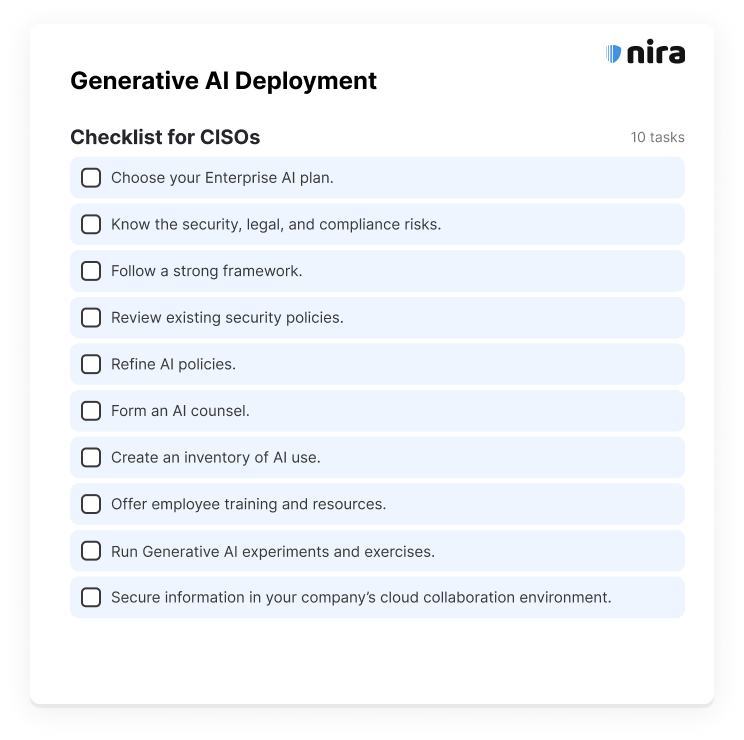

Here are the 10 key steps for CISOs who plan to use Microsoft Copilot in their organizations.

1. Choose a Copilot.

- There are currently seven Copilots for work: Copilot for Microsoft 365; Copilot for Sales; Copilot for Service; Copilot Studio; Copilot for Security; Copilot for Finance; and Copilot for Azure. Compare their prices, pros, and cons before choosing.

2. Know the risks.

- Deeply understand and assess the major security risks of using Generative AI tools before rolling them out.

3. Follow a strong framework.

- Monitoring standards bodies like NIST is crucial to keeping organizations aligned with a strong security framework. Frameworks built specifically for AI, like the AI trust, risk, and security management (AI TRiSM) framework, are useful to consult.

4. Review existing security policies.

- Have teams review company policies to ensure sensitive data is not being overshared. This includes managing SharePoint sites, having proper access permissions, and adding sensitivity labels to classify confidential data.

5. Refine AI policies.

- Teams should create or revise company policies for using Generative AI. Refine AI policies to include Microsoft Copilot, and update any other policies (e.g., data classification) as required.

6. Form an AI council.

- CISOs and security teams should not be the only or primary stakeholders dealing with the repercussions of AI assistant rollouts and usage. “Work with organizational counterparts who have active interests in GenAI, such as those in legal, compliance, and lines of business to formulate user policies, training, and guidance,” Gartner advises.

7. Create an inventory of AI use.

- Teams should develop an inventory of existing AI use, and stay aware of the use of Shadow IT. Even if you ban LLMs and AI chatbot usage at your company, employees will find ways and workarounds to use them.

8. Offer employee training and resources.

- Lay out guidance for employees and offer them training and resources for using Copilot. Make sure employees understand the risks associated with these tools, as well as how to secure access permissions and company files.

9. Run AI experiments and exercises.

- Before implementing AI assistants, your team can run tabletop exercises for threat scenarios related to the use of Microsoft Copilot and other Enterprise AI tools. Red teaming exercises, where teams attempt to attack AI models and bypass restrictions, give companies a better idea of how to respond to potential incidents.

10. Secure information in your company’s Microsoft 365 environment.

- The best Copilot rollout cleans up oversharing before employees begin using the tool. Your team should evaluate your company’s entire Microsoft 365 environment, including how sensitive data is shared in SharePoint, OneDrive, and Teams.
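
To prioritize the cleanup described in step 10, a team might summarize where overshared files are concentrated before rollout. The following is a rough sketch that assumes scan results are already available as simple tuples; the tuple layout, the labels, and the sample rows are invented for illustration.

```python
from collections import Counter

# Hypothetical scan results: (workload, sensitivity_label, is_overshared).
scan_results = [
    ("SharePoint", "confidential", True),
    ("SharePoint", "general", True),
    ("OneDrive", "confidential", True),
    ("OneDrive", "confidential", False),
    ("Teams", "confidential", True),
    ("SharePoint", "confidential", True),
]


def oversharing_summary(results):
    """Count overshared files per (workload, sensitivity label) pair."""
    return Counter(
        (workload, label)
        for workload, label, overshared in results
        if overshared
    )


summary = oversharing_summary(scan_results)

# List the largest concentrations of overshared files first.
for (workload, label), count in summary.most_common():
    print(f"{workload:<10} {label:<13} {count}")
```

Sorting by count gives a rough order of work: the workload and label combinations with the most overshared files are the ones to review first.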

Generative AI work assistants are going to be used by employees, whether officially sanctioned or not. It’s imperative for CISOs and security teams to fully comprehend the security, legal, and compliance risks associated with these technologies. From there, they must implement robust measures to reduce these risks.

Failing to address these risks opens the door to data breaches, manipulated content, and sensitive information exposure. Proactive security is crucial to unlocking the full potential of Generative AI while keeping an organization safe.

Nira helps companies with AI governance by securing company data in Microsoft SharePoint, OneDrive, Teams, and more. Security teams use Nira to address AI governance risks like oversharing, bad permissions, and insider threats, in a few clicks.

With Nira, your team can:

- Safeguard sensitive files with real-time access control.

- Automate security policies for risk, governance, and compliance management.

- Efficiently investigate and resolve security incidents.

- Protect millions of company files at once, without issues.

Get started with a free Microsoft 365 Security Risk Assessment. Reach out to learn more.