How to Reduce Information Security Risks When Deploying Copilot for Microsoft 365

The mass adoption of generative artificial intelligence has outpaced security measures as companies rush to develop and release AI-powered products.

Microsoft launched Copilot, Google released Gemini, and OpenAI has made headlines with ChatGPT. Organizations are eager to test AI assistants for company tasks, from creating content to writing code.

But developing technology comes with new security risks, and companies using AI products have concerns.

According to Cisco’s Data Privacy Benchmark Study, 91% of organizations acknowledge the need to reassure their customers about using generative AI.

Respondents—security professionals worldwide—were worried that generative AI could hurt the organization’s legal rights and intellectual property (69%) and that the data entered may be shared publicly or with competitors (68%).

As more employees use these tools, what do you need to know about AI assistants to keep your company data safe?

We’ll explore the risks of using Microsoft Copilot, explain how to reduce them, and help you decide if you want to implement the tool in your company.

The four key Microsoft Copilot risks we’ll cover are:

- Oversharing and inadequate permissions

- Insider risks

- External threat actors

- Privacy concerns

What is Copilot for Microsoft 365?

Microsoft Copilot is a generative AI chatbot developed by Microsoft in collaboration with OpenAI that launched on February 7, 2023.

Copilot for Microsoft 365, the paid enterprise and business version of Microsoft Copilot, is available to companies that use Microsoft 365 and choose to purchase the upgrade. These organizations must hold a product license for Microsoft 365 Business Standard, Business Premium, E3, E5, or Office 365 E3 or E5.

Copilot for Microsoft 365 promises to help end users with work-related tasks, from summarizing research to staying engaged in meetings. The tool integrates with a variety of Microsoft apps including Teams, Outlook, Word, Excel, PowerPoint, and more.

Microsoft calls Copilot for 365 a “new AI-employee alliance” that will lessen workloads and boost productivity, and organizations are already thinking about the pros and cons of adding the tool.

According to a poll conducted by Nira, 49% of IT and Security leaders said they already use or plan to use Microsoft Copilot in their companies.

However, not all companies were on board with Microsoft’s “everyday AI companion.” Another 39% of respondents said they would not use Copilot, and the remaining 12% said their company did not allow it.

Companies still have concerns about giving Copilot for 365 free rein in their organizations.

Security professionals are primarily worried about the tool’s ability to search and gather data from all Microsoft 365 documents, emails, calendars, presentations, contacts, and more.

Microsoft Copilot Security: Concerns, Risks, and Vulnerabilities

Imagine the huge amount of data your company has built up from every email, meeting, chat, and document in your company’s Microsoft 365 tenant.

Copilot for Microsoft 365 can analyze it all.

And, if not handled securely, sensitive company data could be exposed.

One major issue arises when an employee has access to a sensitive company document without realizing it. If that overshared data gets into the wrong hands, the security implications can be far-reaching.

We’ll look deeper at the four biggest security risks of using Copilot below.

Copilot Risk 1: Oversharing and inadequate permissions

The core issue with Copilot for Microsoft 365 is that it can draw on all the data an employee has access to: if the employee can reach sensitive data, so can Copilot.
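To make that point concrete, here is a minimal Python sketch of how one might estimate which sensitive documents a given user, and therefore an assistant acting on that user's behalf, could reach. The `Document` record, its fields, the group names, and the sample addresses are all hypothetical. The sketch does not call Microsoft Graph or any real Microsoft 365 API, and real permission models (inheritance, nested groups, sharing links) are more involved than this.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Document:
    """Hypothetical, simplified view of one document's sharing state."""
    name: str
    sensitive: bool
    users: set[str] = field(default_factory=set)    # direct grants
    groups: set[str] = field(default_factory=set)   # group grants
    org_wide_link: bool = False                      # "anyone in the company" link


def reachable_documents(user: str, user_groups: set[str], documents: list[Document]) -> list[Document]:
    """Documents the user can open via a direct grant, a group, or an org-wide link."""
    return [
        doc for doc in documents
        if doc.org_wide_link or user in doc.users or (doc.groups & user_groups)
    ]


def sensitive_exposure(user: str, user_groups: set[str], documents: list[Document]) -> list[str]:
    """Names of sensitive documents within the user's reach, sorted for stable output."""
    return sorted(
        doc.name for doc in reachable_documents(user, user_groups, documents) if doc.sensitive
    )


# Illustrative data only.
docs = [
    Document("Q3 board deck", True, users={"cfo@example.com"}),
    Document("Salary bands", True, groups={"hr"}, org_wide_link=True),
    Document("Lunch menu", False, org_wide_link=True),
    Document("Acquisition memo", True, groups={"legal"}),
]

print(sensitive_exposure("intern@example.com", {"interns"}, docs))
```

In this made-up data set, the intern has no direct or group grant on any sensitive file, yet "Salary bands" still appears in the result because of its company-wide link. That is the kind of access an employee may not know they have.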

One issue we often see in our work at Nira is data being overshared within organizations.

According to a 2023 report, “16% of an organization’s business-critical data is overshared.” That adds up: companies face an average of 802,000 files containing “business-critical data” at risk due to oversharing.

The results become more worrying when we realize that 83% of “at-risk files” were overshared with users and groups within the organization. Files that may be confidential or overly sensitive could be exposed to the entire company.

Overall, more than “15% of all business-critical files are at risk from oversharing, erroneous access permissions, and inappropriate classification,” according to the study.

Because of oversharing, a company’s sensitive files could be seen by users who should not have access to them, creating a risk of data exposure. Microsoft Copilot makes information easier for employees to find than ever, which significantly increases that risk.

Employees often have more access to company information than they need or even realize.

Sharing Microsoft documents, for example, is as easy as adding collaborators or creating a link that anyone in the company or on the internet can open. And permissions are rarely cleaned up once people no longer need access.

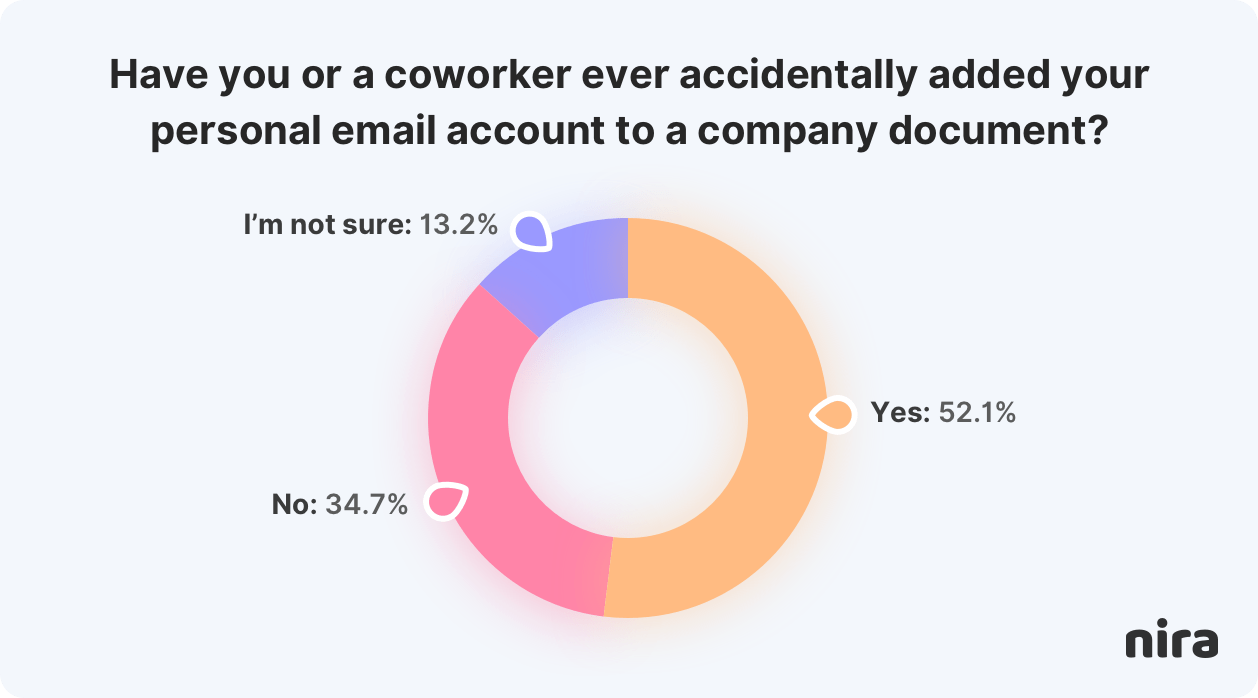

For example, a Nira survey found that more than half of employees said they or a coworker accidentally added their personal email accounts to company documents.

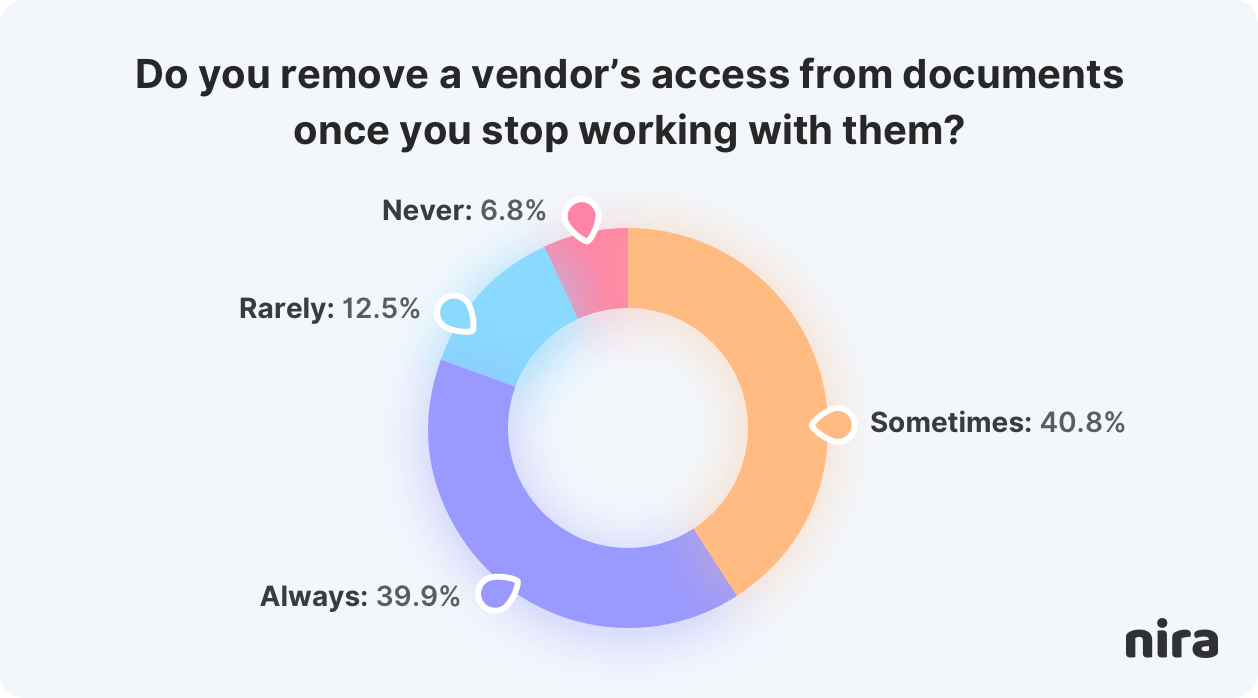

And although 40% of employees said they cleaned up vendor access on documents once an engagement was over, the other 60% did so only sometimes, rarely, or never.

To reduce risks when using Copilot, companies must first ensure their data is not being overshared internally and externally.
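As a rough illustration of what such a check could look like, the Python sketch below scans a list of sharing grants and flags the patterns described above: public links, company-wide links, personal email accounts, and external accounts. The input format (`type`, `email`), the `PERSONAL_DOMAINS` list, and the sample domain `contoso.example` are assumptions made for this example. In practice the data would come from a sharing report or audit export, and the sketch is not tied to any specific Microsoft 365 API.

```python
from __future__ import annotations

# Illustrative list only; a real check would use a fuller set of consumer domains.
PERSONAL_DOMAINS = {"gmail.com", "outlook.com", "yahoo.com"}


def classify_grant(grant: dict, company_domain: str) -> str | None:
    """Return a risk label for one sharing grant, or None if it looks routine."""
    kind = grant["type"]
    if kind == "anyone_link":
        return "public link"
    if kind == "org_link":
        return "company-wide link"
    # Remaining grants are assumed to be direct grants to an email address.
    domain = grant["email"].rsplit("@", 1)[-1].lower()
    if domain in PERSONAL_DOMAINS:
        return "personal account"
    if domain != company_domain:
        return "external account"
    return None


def oversharing_findings(files: list[dict], company_domain: str) -> list[tuple[str, str]]:
    """Collect (file name, risk label) pairs for every risky grant, in input order."""
    findings = []
    for f in files:
        for grant in f["grants"]:
            risk = classify_grant(grant, company_domain)
            if risk is not None:
                findings.append((f["name"], risk))
    return findings


# Hypothetical sample data.
files = [
    {"name": "Pricing model.xlsx", "grants": [
        {"type": "user", "email": "ana@contoso.example"},
        {"type": "user", "email": "ana.personal@gmail.com"},
    ]},
    {"name": "Roadmap.pptx", "grants": [{"type": "org_link"}]},
    {"name": "Vendor SOW.docx", "grants": [
        {"type": "user", "email": "pm@vendor.example"},
        {"type": "anyone_link"},
    ]},
]

for name, risk in oversharing_findings(files, "contoso.example"):
    print(f"{name}: {risk}")
```

A report like this is only a starting point. Whether a company-wide link or an external grant is actually a problem depends on how sensitive the file is and whether the access is still needed.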

Copilot Risk 2: Insider risks

Employees misusing Copilot, either by accident or on purpose, could lead to data breaches or potential leaks.

Data breaches caused by unauthorized access, what we call access risks, are happening more frequently as cloud collaboration grows. Breaches are costly, time-consuming to fix, and damage customer relationships.

Nearly 80% of access-risk incidents are caused by employee accidents. One reason is that employees don’t always realize how much data they have access to.

For example, Nick Ross of T-Minus365 asked Copilot to provide a list of all the files and chats that referenced a specific topic. Nick was surprised when the tool surfaced “a citation of a document I didn’t know existed and a Teams Channel that I didn’t know I was a member of.”

In Nick’s example, the company data that Copilot found was not sensitive; it becomes an issue when employees accidentally surface confidential or sensitive information they should not see.

And although most incidents tend to be accidental, malicious exfiltration can happen. Insiders could purposefully use prompt engineering techniques to ask questions that would give them information they shouldn’t have.

In extreme scenarios, insiders could compromise the security of the Copilot software or its underlying model by exploiting vulnerabilities or introducing malicious code.

Copilot Risk 3: External threat actors

Threat actors may increasingly compromise accounts that have AI-enabled features and then use the AI to exfiltrate sensitive data.

For example, an attacker gains access to an account with Copilot for 365 and attempts to use it to access sensitive data from Microsoft Graph.

Or a threat actor could leak a response to a prompt that contains confidential information, for example by prompting for a financial report or by leaking a response that reveals a trade secret.

If attackers gain access to a Copilot-enabled account, company data is at risk.

It’s vital to have the right permissions, security features, and data protection policies set up in your company’s Microsoft 365 tenant (e.g., OneDrive, SharePoint) to avoid Copilot misuse.

Copilot Risk 4: Privacy concerns

Legal or privacy challenges may arise from using Copilot-generated content, including intellectual property rights and compliance issues.

We’ve seen multiple lawsuits in the news revolving around generative AI tools. OpenAI is currently facing copyright and legal challenges from the New York Times, several digital media outlets, and various authors, comedians, and radio hosts.

To avoid similar issues with Copilot for Microsoft 365, organizations must handle privacy implications carefully for compliance, legal, and ethical reasons. This can include changing Copilot’s privacy settings or sanitizing information that Copilot is given access to.

According to Microsoft, Copilot for Microsoft 365 can access your organization’s data through Microsoft Graph, including emails, chats, and documents that you have permission to access.

The suggestions from Microsoft Copilot are relevant and based on your data, but this “also means that Microsoft Copilot may expose sensitive or confidential information in its responses.”

Microsoft warns that you should always check the content that Microsoft Copilot creates before you use or share it with anyone else.

Although the tool does not keep the prompts you send or the responses you receive, it does collect some data about how you interact with it for improvement and diagnostic purposes.

This data is encrypted and anonymized, but it could still contain parts of your personal or organizational information, Microsoft cautions.

Users can choose not to share this data with Microsoft by changing their privacy settings in Microsoft 365 apps.

Copilot for Microsoft 365: How to prepare your company

Step 1: Understand risks in your company’s Microsoft 365 tenant

The key to a data security strategy is understanding your company’s Microsoft 365 environment and being aware of its risks.

On the one hand, Copilot only uses data from the current user’s Microsoft 365 tenant. This means that if a user is a guest in another tenant or your company uses “cross-tenant sync,” then that data should be safe.

However, your company’s Microsoft 365 tenant acts as a “central identity provider” for your organization with a set of accounts, groups, and policies. Permissions and sharing of resources happen across this tenant as your employees collaborate quickly.

To keep this environment safe, you must understand your company’s risks and make sure your organization’s tenant is secure.

Microsoft suggests several steps for configuring your organization’s tenant, which you may be familiar with if you’ve worked with a company that uses Microsoft.

However, with the high frequency of oversharing and bad permissioning, it can’t hurt to review these steps and get a better overview of the environment you need to protect.

Step 2: Ensure your company has the right permissions, access controls, and security policies

Keeping your company secure when using Copilot for Microsoft 365 goes back to having the right permissions in the first place.

Microsoft puts it this way:

“It’s important that you’re using the permission models available in Microsoft 365 services, such as SharePoint, to help ensure the right users or groups have the right access to the right content within your organization.”


Many security professionals adhere to the idea of “least privilege” when it comes to permissions. This applies to access to company documents, presentations, administrator accounts, and so on. The goal is to give employees only the permissions they truly need.

To keep your tenant secure, you must identify and understand these permissions and make sure they are up-to-date.

This goes along with the principle of access control. You must control access to your company’s data and make sure your company has the proper security policies in place.
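One simple way to move toward least privilege is to review access that has not been used in a while. The Python sketch below assumes you already have a list of grants with a last-used date, for instance from an audit log export. The field names (`user`, `file`, `last_used`) and the 90-day threshold are illustrative assumptions, not a Microsoft recommendation.

```python
from __future__ import annotations

from datetime import date, timedelta


def stale_grants(grants: list[dict], today: date, max_idle_days: int = 90) -> list[dict]:
    """Return grants that were never used or were last used before the cutoff date."""
    cutoff = today - timedelta(days=max_idle_days)
    return [
        g for g in grants
        if g["last_used"] is None or g["last_used"] < cutoff
    ]


# Hypothetical sample data.
grants = [
    {"user": "ana@example.com", "file": "Budget.xlsx", "last_used": date(2024, 5, 20)},
    {"user": "vendor@partner.example", "file": "Contract.docx", "last_used": date(2023, 11, 2)},
    {"user": "sam@example.com", "file": "Payroll.xlsx", "last_used": None},
]

# Pass the review date explicitly so the result is reproducible.
for g in stale_grants(grants, today=date(2024, 6, 1)):
    print(f"Review: {g['user']} on {g['file']}")
```

Flagged grants are candidates for review rather than automatic removal. Some access is rarely used but still legitimate, so a human or a policy decision should confirm each cleanup.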

To protect company files in Microsoft 365 and Google Workspace using access control and automated security policies, visit here.

Step 3: Educate your employees and give them the tools they need

Employees can be your best resource when keeping data safe, but without the proper training and tooling, they are at a disadvantage. Most employees are strapped for time (one of the reasons they are using AI assistants) and do not have excess resources for access control.

It often falls on IT and Security teams to educate employees about security risks and make sure they follow proper procedures. But these teams are often swamped themselves.

It’s important to provide resources for employees so that they understand the guidelines and risks when using Copilot for Microsoft 365.

Overall, employees need to understand their permissions and what files and data they have access to in Microsoft 365, especially when it comes to sensitive information.

To learn how employees can get visibility and control over access to SharePoint, OneDrive, and Teams documents they own, visit here.

Copilot for Microsoft 365 Security Plan: How to reduce risks

Having a plan for protecting your data in Microsoft 365 is vital before enabling Copilot. When creating your plan, think about these questions:

- What does your company consider to be sensitive data?

- Where is that sensitive data found in your company’s Microsoft 365 tenant?

- How is your company’s data being shared internally?

- How is your company’s data being shared externally?

- How are employees using links for sharing internally and externally?

- Who has access to your company’s data, and what are their permissions?

- Does your company use data classification tools like Microsoft Purview?

- How are you applying labels in your organization?

- Has your company incorporated Microsoft’s Data Loss Prevention (DLP) methods or another DLP solution?

- What are your company’s current access controls for users and groups?

- Have you updated your company’s access policies based on the risks you’ve identified?

- Have you created a plan for your company’s data and access control lifecycle?

- Does your company have data retention policies?

- Do you have ways to educate employees about access risks and permissions?

- Do your employees have a way to clean up permission misconfigurations?

- If employees were to use Microsoft Copilot, how could that affect your company?

- What information might be exposed if Copilot were rolled out?

Having a concrete plan for securing access to Microsoft 365 will help with your company’s overall security response and make it easier to deploy generative AI tools with confidence.

Stop oversharing and fix document permissions, in seconds

Companies must be mindful of access risks when enabling Copilot for Microsoft 365 in their organization. It’s vital to be aware of how sensitive data is handled and who has access to it.

Permissions should be updated, and employees must be given the right tools and training to keep their data safe.

A Data Access Governance system can solve the problems of oversharing and inaccurate document permissions in tools like Google Workspace, Microsoft OneDrive, SharePoint, and Teams. It automates compliance with security policies for your company and remediates risks from unauthorized access in seconds.

To learn how to keep your company’s data safe when using Copilot for Microsoft 365 and other AI tools, reach out here.