How to Write the Perfect Postmortem Report

So you were just assigned the task of writing a postmortem report.

Or maybe you need a quick template that you can assign someone on your team.

Either way, what does a great postmortem report look like?

I’ve got you covered.

Across several companies, my teams have produced hundreds of postmortem reports over the years. I’ve tweaked my report structure to the point that folks can easily produce a high-quality report even if it’s their first time.

I’m going to break down the exact report that I use with my teams. Feel free to copy as much of it as you like.

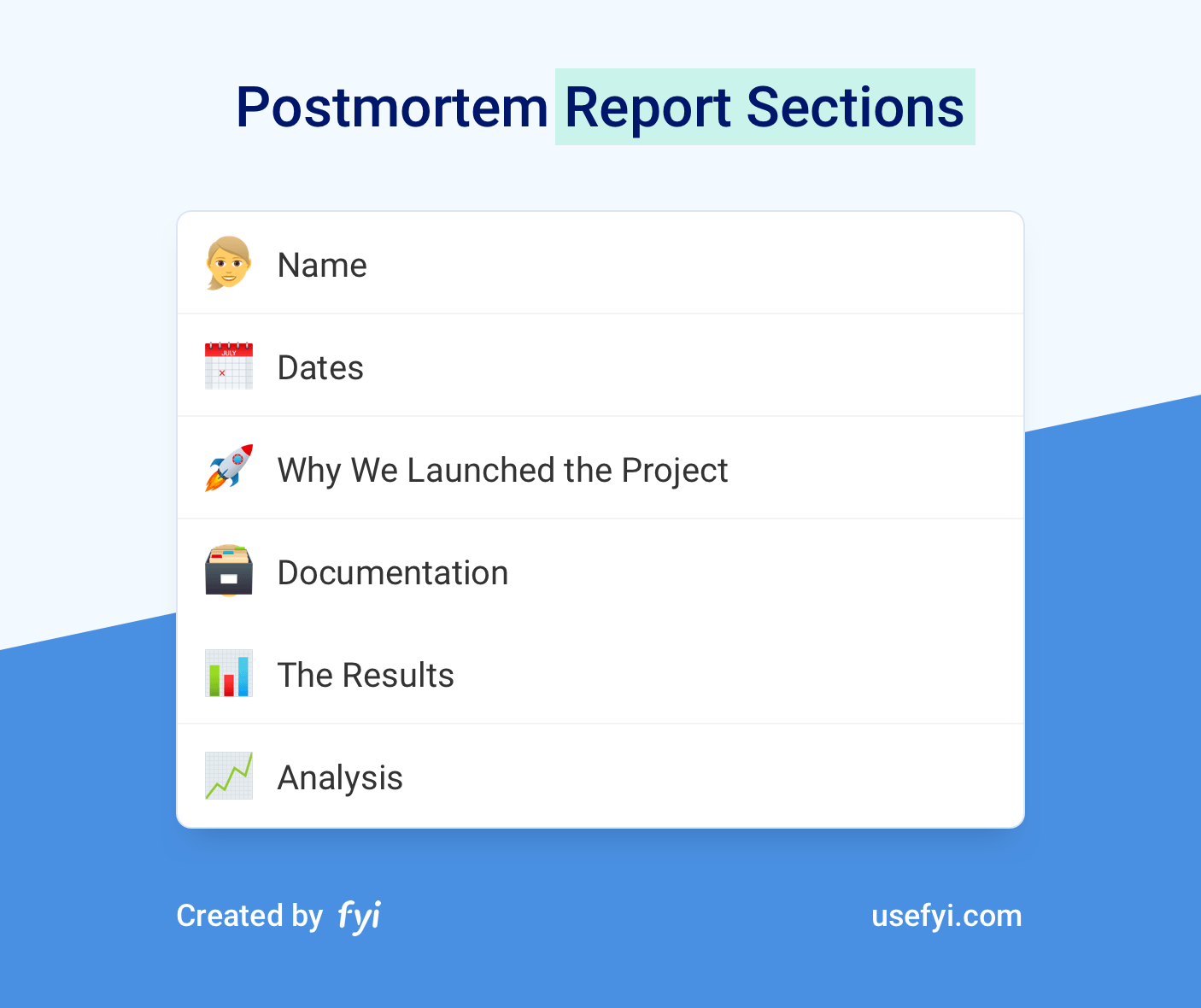

My reports always include these items in this order:

- Name

- Dates

- Why We Launched the Project

- Documentation

- The Results

- Analysis
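If you’d rather hand someone a blank skeleton than a list, the section order above can be turned into a tiny generator. This is only a sketch: the section titles come from the list above, while `SECTIONS`, `blank_report`, and the `TODO` placeholder are names I made up for illustration.

```python
# Section titles are taken from the list above, in the same order.
# Everything else here (names, the TODO placeholder) is a hypothetical helper.
SECTIONS = [
    "Name",
    "Dates",
    "Why We Launched the Project",
    "Documentation",
    "The Results",
    "Analysis",
]


def blank_report(project_name: str) -> str:
    """Return a plain-text report skeleton with every section in order."""
    # The project name goes right at the top and stands in for the "Name" section.
    lines = [project_name, ""]
    for title in SECTIONS[1:]:
        lines += [title, "", "TODO", ""]
    return "\n".join(lines)


print(blank_report("Example Project"))
```

Paste the output into a new file or wiki page and fill in each `TODO`.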

Let’s go through each of them.

Name of the Project

This one’s pretty easy: give the project a name and put it right at the top of the report. If it’s a file, I use the name of the project as the file name. Or if I’m saving a page in a wiki, I’ll use the name of the project as the title of the page.

Usually, I have the team save all their postmortem reports in the same place so there’s no need to add “Postmortem Report” to the title on every document.

There are a few naming tricks that I’ve discovered over the years.

Include Codenames

If your project used a codename or placeholder name of some kind, include all those variants at the top of the report. I’ve found that I always forget which codenames applied to which project. Then digging through old documents gets super confusing. For example, I picked the codename “Project Kumquat” one time, but if someone showed me a document on Project Kumquat today, I wouldn’t be able to tell them which project it was about. I’ve completely forgotten.

Even if you use a generic placeholder for a project before it gets an official public name, include those placeholders right at the top of the postmortem report.

Make the Title as Clear as Possible

Try to make the title as clear and literal as possible. The whole point of your postmortems is to help people understand what has already been done in the past and learn from those projects. The clearer you can make the title, the more likely it is that people will find the postmortem report that they’re looking for.

Date of the Project

Include any and all relevant dates. After a year or so, I’m not even able to remember which quarter certain projects launched in. I’ve found dates in postmortem reports to be lifesavers on several occasions when I needed to look up exactly when a project went live.

Depending on the project, different dates will be relevant:

- The dates something was live

- Any testing or early access dates before a full launch

- Dates that work started or ended if they’re different from the launch dates

For anything that went live, definitely include those dates. Use your best judgment on whether to include other dates.

Why We Launched the Project

What was the original goal of the project? What were we trying to accomplish?

Definitely write this down. Have you ever reviewed a project that took six months’ worth of work from a full team and asked everyone why they were doing it, only to get a bunch of blank stares? I have. I’ve also given those blank stares on a few occasions.

I’m always shocked at how easy it is for me to forget why major projects were approved.

That’s why we write it down. The team will forget.

Basically, this is your hypothesis of the entire project. Here’s an easy format to use:

If we do X project, we expect Y to happen because of Z.

Why is this project worth all the effort? More revenue? Customer satisfaction? Internal efficiency? Happier employees? Whatever it is, write down the impact you expected to achieve and why you thought the project would have that impact.

Document the Project

Now it’s time to include as much documentation as possible on the project itself.

For the most part, you should be able to avoid creating anything from scratch for this section. Your main goal is to find every working document that was used during the project and include it in this section. Either dump all the files into a folder or link to everything from your postmortem report.

Err on the side of including too much, not too little. I’ve never been mad that a postmortem report included links for too many documents. I have been frustrated when I was trying to diagnose something and there weren’t enough links for old projects.

I do like to include lots of screenshots or photos for anything that went live. If I launched a new product with an online campaign, I’d take screenshots of all the emails, ads, and all the other marketing material that we shipped. Or if I was documenting the results from a sponsor booth at a conference, I’d take a bunch of photos of the booth and the conference.

One note here: take screenshots or photos of the actual live experience. Some folks will try to take screenshots of a demo, staging environment, or the final deliverable before it goes live. That’s not good enough for me. A lot can happen between the final version and the version that actually launches. Bugs and mistakes go live at the last step all the time.

I wish I could say that I rarely caught problems when reviewing the live assets. Unfortunately, problems get shipped all the time that totally invalidate the results of the project. Get eyes on the live version and document it in your report. You’ll get a much more accurate read on what’s happening. When a project misses the mark, this could explain why.

The Results of the Project

Now we start to review the impact of the project.

I focus mostly on quantitative results and include qualitative feedback to help add color if needed.

Start with your KPIs for the project, the specific metrics that you’re measuring the project against.

In some cases, this section is pretty easy. Let’s say you launched a faster website to increase your site speed. All you need to include is your site speed before the change and your site speed after. Super easy.
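For a before-and-after KPI like this, it helps to report the relative change next to the raw numbers. Here’s a minimal sketch; the `percent_change` helper and the load times are made up for illustration, not real results.

```python
def percent_change(before: float, after: float) -> float:
    """Relative change from before to after, as a percentage."""
    if before == 0:
        raise ValueError("before must be non-zero to compute a relative change")
    return (after - before) / before * 100.0


# Hypothetical page load times in seconds (illustrative numbers only).
before_seconds = 4.0
after_seconds = 3.0

change = percent_change(before_seconds, after_seconds)
print(f"Load time: {before_seconds}s -> {after_seconds}s ({change:+.1f}%)")
# prints: Load time: 4.0s -> 3.0s (-25.0%)
```

For a metric like load time, a negative change is the good outcome, so say which direction counts as a win when you write up the result.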

In other cases, your results reveal larger problems in how projects are being prioritized and approved. Watch for these problems during the results section.

No one knows which metrics to use

This is probably the most common problem. A project gets selected with a loosely defined goal. Employee and customer happiness are common examples. Or audience engagement.

Everyone agrees that customer satisfaction is important, but no one takes the extra step to decide how customer satisfaction will be measured. Then at the end of the project, teams are left scrambling to find metrics that indicate the goal was accomplished.

Whenever I run into this problem, I make a note that I need to push for clarity on how success will be measured when the project is kicked off. If there’s no agreement in the beginning, there won’t be agreement when everyone has to decide if the project was a success.

Granted, some things are an enormous pain to measure. Or a project has a larger purpose than hitting a given metric. Sometimes you need to do something solely because it’s the right thing to do. In these cases, I call this out ahead of time and then document that decision within the results section.

The data becomes overwhelming

For some projects, the opposite problem occurs. There are so many metrics to choose from that it feels like wading through a swamp.

I’ve run into this problem a lot with online marketing campaigns. With the analytics that we have on everything, it’s easy to dump several dozen metrics into the postmortem report. If I include data on every metric, no one will know which metrics actually matter. Then it’s nearly impossible to decide if the campaign was a success or not.

As a general rule, I like to limit this section to five metrics or fewer. I’ll only include more if everyone is perfectly clear on which metrics determine success and which metrics are “supporting metrics” for extra context. Another option is to build a data dump of everything in another document or spreadsheet. Then link to all the auxiliary data and treat it as an appendix. That allows you to include everything as a reference while also keeping everyone focused on the few metrics that matter.

Poorly defined or selected metrics

Even though I wish there were an easier way, just about every metric has some ambiguity around it.

Metrics have multiple definitions and they can usually be pulled from different locations. Teams need to use the same definitions and pull metrics from the same “source of truth” for there to be any consistency.

Take website traffic for example. Are we using sessions, users, or pageviews? And which tool are we pulling the metric from? Every tool will have a slightly different number.

Earlier in my career, I used to be overly concerned about which metric was the most accurate. These days, I just want to make sure the entire team is using the same metric consistently. That means using the same definition and pulling it from the same source of truth.

This tends to be a problem with folks doing postmortem reports for the first time. Once they know where to grab certain metrics, mistakes rarely happen. So when I have someone on my team doing a postmortem report for the first time, I do a quick check to make sure they pulled all the data from the right location.

If you find that folks are using metrics inconsistently across the team, I’ve also had success with an internal metric glossary. It’s a single document that outlines the exact definition for core metrics and where they can be found.
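A glossary like this doesn’t need to be fancy. If your team keeps it somewhere scriptable, one possible shape is sketched below. The field names, the `lookup` helper, and the sample entry are all assumptions for illustration; swap in your own definitions and sources.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricDefinition:
    name: str
    definition: str       # the exact definition the whole team agrees to use
    source_of_truth: str  # the one place the number should be pulled from


# Hypothetical entry; the definition and source are placeholders.
GLOSSARY = {
    "website_traffic": MetricDefinition(
        name="Website traffic",
        definition="Sessions per calendar week",
        source_of_truth="(the one analytics tool your team agreed on)",
    ),
}


def lookup(metric_key: str) -> MetricDefinition:
    """Return the agreed definition, or fail loudly if the metric is undefined."""
    if metric_key not in GLOSSARY:
        raise KeyError(f"{metric_key!r} is not in the glossary; define it first")
    return GLOSSARY[metric_key]


entry = lookup("website_traffic")
print(f"{entry.name}: {entry.definition}, pulled from {entry.source_of_truth}")
```

Failing loudly on an unknown metric is a deliberate choice here: it nudges people to agree on a definition before the number shows up in a report.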

Project Analysis

By far, the analysis section is the most important part of the postmortem report. Make sure to get this part of the report right.

It’s so important that we put together a deep-dive on postmortem analysis here.

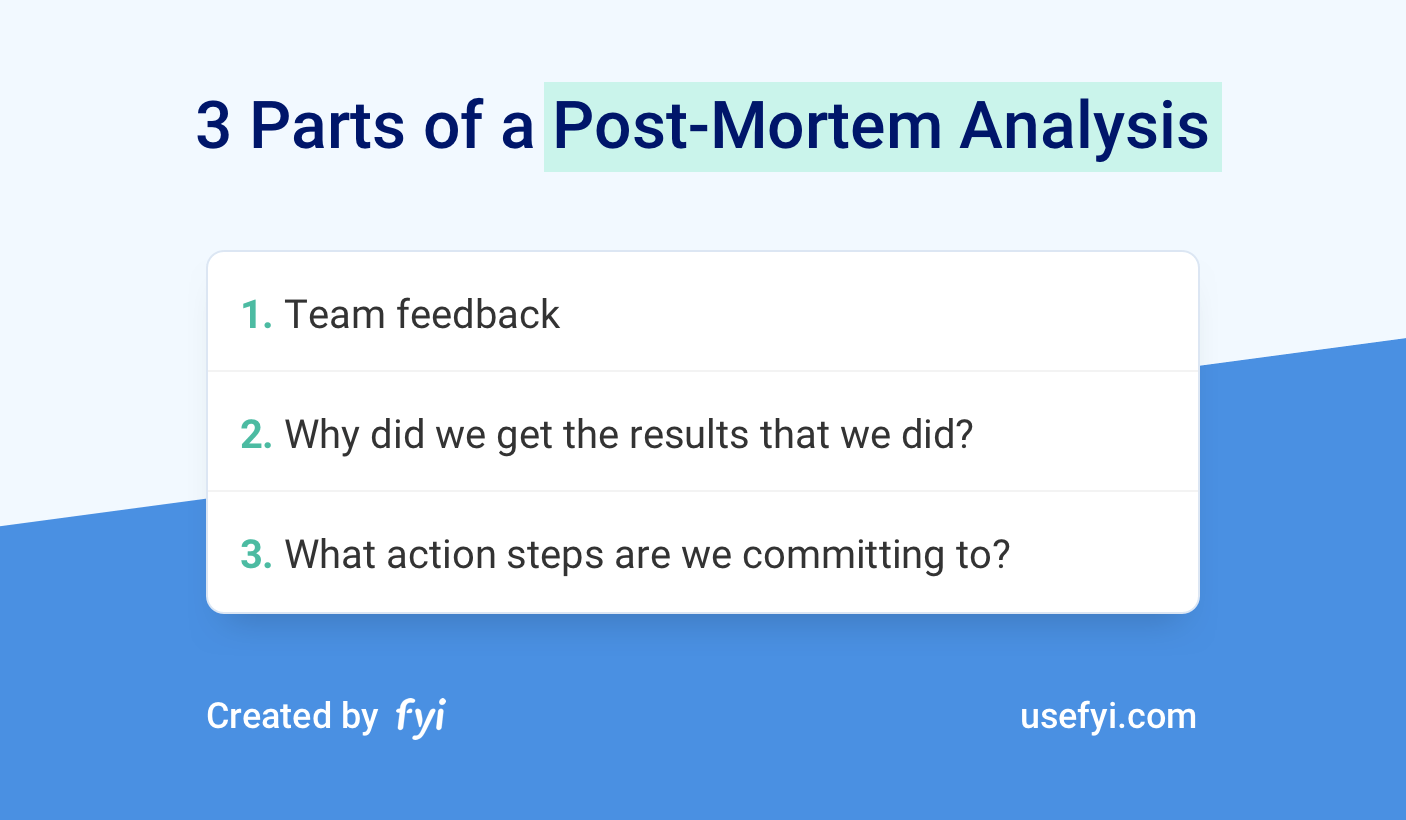

Here’s a quick overview of the three items that I add to my analysis section.

Feedback from the Team

For cross-team projects, you really want an area for any team to air their grievances. This gives you a chance to fix problems in your processes permanently. It also keeps everyone bought in over the long term by giving them a voice. And teams can point out data that was missed during the rest of the postmortem report.

I ask a representative from each team involved to add feedback from their team to this section. Multiple people will add to this section of the report.

Why did we get the results that we did?

I prefer to hold a meeting and fill this section out as a group. It allows us to have a deep discussion on the root causes of the project’s success or failure instead of accepting surface-level explanations.

During the meeting, I’ll push the team to think deeper about the real reasons for the outcome of the project using open-ended questions.

Once we get to some solid explanations, I’ll ask someone on the call to write up a quick summary for this section.

What are our action steps and who owns each step?

One of my management rules is that if everyone owns something, no one owns it.

For every postmortem report, I document which action steps we’re committing to and the individual who owns each action step. This maintains a good tempo of iteration and keeps postmortems from turning into busy-work.

This can be easily done right after the discussion on root causes. Ask the team which action steps make sense and who wants to be accountable for each one. For tasks that don’t have volunteers, I’ll assign them to the most appropriate person at the meeting.
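If your action steps live somewhere scriptable, a quick check can flag any step that left the meeting without an owner. This is a minimal sketch under that assumption; `ActionStep`, `unowned`, and the sample steps and names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ActionStep:
    description: str
    owner: Optional[str] = None  # one named individual, not a team


def unowned(steps: List[ActionStep]) -> List[ActionStep]:
    """Return the steps that have no owner (missing or blank)."""
    return [step for step in steps if not (step.owner and step.owner.strip())]


# Hypothetical action steps from a postmortem meeting.
steps = [
    ActionStep("Agree on success metrics at project kickoff", owner="Sam"),
    ActionStep("Add a live-version screenshot check to the launch checklist"),
]

for step in unowned(steps):
    print(f"Needs an owner: {step.description}")
```

Anything this prints is a step to assign before the meeting ends.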